Artificial Intelligence With Patients in Mind: A New Governance Blueprint for Responsible AI in Pharma

July 2025

Executive summary

Artificial intelligence (“AI”) is already transforming how patients seek information, connect with communities, and engage with healthcare. AI technologies shorten the distance from innovation to patient benefit, from faster drug discovery and development to earlier disease detection and patient access. For example, pharmaceutical companies including Amgen, Bayer, and Novartis are training AI to scan billions of public health records, prescription data, medical insurance claims and their internal data to find clinical trial patients, in some cases reducing the time it takes to sign participants up by 50%.¹

Patient advocacy groups (“PAGs”), such as the Crohn’s & Colitis Foundation, are also leveraging AI technology to support clinical trial recruitment and accelerate drug discovery and development via tools like CytoReason.² Demonstrating the broad potential of AI for patients, the National Psoriasis Foundation (NPF) is partnering with Mindera Health to advance personalized psoriasis treatment through the Mind.Px test—a painless, AI-powered diagnostic that predicts drug response using RNA analysis—enhancing treatment precision, lowering costs, and accelerating patient access to effective therapies.³

For pharma, this creates both an opportunity and an obligation: to harness AI to enhance patient engagement while safeguarding trust, equity, and human connection. Pharma companies that act now to build responsible, patient-centered AI strategies in collaboration with advocacy organizations will not only improve outcomes but also strengthen reputation and credibility in an era of growing digital skepticism.

Several leading health authorities, medical societies, patient advocacy organizations, and industry consortia have identified key principles for responsible AI use in healthcare, including recent efforts by The Joint Commission, Coalition for Health AI (CHAI), the Alliance for Artificial Intelligence in Healthcare (AAIH), and the National Academy of Medicine (NAM). Notably, patient advocacy organizations like The Light Collective are contributing patient-centered governance frameworks, yet pharma’s adoption of these principles remains uneven, highlighting the need for stronger integration of patient advocacy leadership in AI oversight and policy.

While all of these organizations work together to establish governance principles based on patient rights, most pharmaceutical companies have yet to adopt and apply these principles consistently in the implementation of AI technologies across their organizations.

DKI Health’s perspective on the responsible use of AI in pharma is shaped by our understanding of the current use cases across both pharma and PAGs, the opportunities and challenges that exist, and the evolving patient-centered principles guiding AI adoption by industry. There is a growing recognition that patient voices must be central in the governance, development, and deployment of AI technologies in healthcare that are intended to serve them.

DKI Health met with our Patient and Caregiver Advisory Council to learn from their experiences and understand how they are exploring AI technology to enhance their own advocacy efforts.

We discovered that while pharma is investing heavily in AI for Research & Development (“R&D”), Medical Affairs, Patient Engagement and other functions, most PAGs, especially local community-based organizations, risk being excluded from these innovations.

This gap undermines the authenticity and impact of AI-driven initiatives for patients.

AI Use Cases for Patient Advocacy Groups and Pharma

As part of our research into how AI is being used to benefit patients, we explored current use cases among PAGs and pharmaceutical companies. Through both secondary and primary market research, we uncovered meaningful opportunities for PAGs and pharma to work together to advance patient-centered innovations.

Most PAGs are still in the early stages of evaluating AI’s potential to support their missions. Current applications include disease detection, clinical trial optimization, content development, patient support and education, and operational/administrative support. Common tools in use include ChatGPT, Microsoft Copilot, and bespoke platforms customized to meet specific organizational needs (Exhibit A – Current AI Uses and Tools by PAGs).

One example of peer-to-peer alignment in AI adoption is the collaboration between the National Bleeding Disorders Foundation (NBDF) and the American Thrombosis and Hemostasis Network (ATHN), which are working together to apply patient data analysis across Hemophilia Treatment Centers (HTCs).⁴,⁵ This partnership demonstrates how PAGs often follow the lead of similar organizations within their therapeutic area when considering AI adoption. For pharmaceutical companies seeking to validate their patient-centric AI technologies, partnering with organizations that have established peer-to-peer alignment offers a strategic advantage: broader scale, reach, and trust within the community.

In parallel, pharmaceutical companies are advancing the use of AI technologies across the life sciences value chain, with increased emphasis on patient-centric models enabled by personalized approaches. These technologies are being applied across a wide range of purposes. (Exhibit B – The 7 Patient-Centered Purposes of AI Adoption by Pharma).

The AI in pharma market is valued at $1.94 billion in 2025 and is projected to reach $16.49 billion by 2034, according to a 2025 EY-Microsoft report.⁶ Examples of AI tools and systems currently being used by pharma companies span all major areas of the value chain:

In drug discovery and design, AstraZeneca is using BenevolentAI’s proprietary AI-enabled platform to move five targets into its discovery portfolio in under three years.⁷

In clinical trial optimization, Janssen’s Trials360.ai platform uses machine learning to predict enrollment rates and identify ideal candidates, improving recruitment speed by 30%.⁸

In supply chain and manufacturing, Merck KGaA partners with Aera Technology to apply AI/ML algorithms that improve demand forecasting, sourcing, and capacity planning. This has led to a 90% improvement in forecast accuracy and a boost in hospital customer service levels from 97% to 99.9%.⁹

In 2023, Viz.ai partnered with Bristol Myers Squibb to enhance early disease detection by using AI to analyze routine ECG data and flag individuals who are at risk for hypertrophic cardiomyopathy (HCM), one of the most common but underdiagnosed genetic heart conditions. AI technology is enabling clinicians to detect the disease months or even years earlier.¹⁰

In patient engagement, Biogen launched an AI-powered multiple sclerosis (MS) care community in July 2022 through Happify Health’s Kopa platform, delivering personalized educational content, clinician access, and peer support for patients.¹¹

In patient data analytics, GSK partnered with Tempus to integrate real-world clinical data and molecular insights, equipping oncologists with actionable intelligence to make personalized cancer treatment decisions.¹²

In content creation and campaign development, more than 80% of large life sciences companies report exploring AI to streamline content design, tagging, and quality review (including literature reviews).¹³ Select pharma companies are early adopters of Veeva’s new AI-powered HCP engagement tool for compliant communications and rapid sharing of educational resources.¹⁴ Veeva announced in April 2025 that it will soon use AI to allow compliant content blocks to be reviewed once and then reused across channels, reducing regulatory risks and easing staff workloads.¹⁵

These use cases underscore the growing need for pharma to adopt formalized AI governance frameworks that go beyond internal staff-level policies. There is a clear demand for industry-wide, third-party-validated guidelines to ensure ethical, effective, and responsible use of AI.

While many of the top 20 pharma companies have senior leadership overseeing AI efforts, only a few have appointed formal Chief AI Officers at the C-suite level; notable examples include Pfizer, Lilly, and Merck. Beyond large pharma, small and mid-sized pharmaceutical and MedTech companies, such as Medtronic and Cosmos Pharmaceuticals, are also beginning to elevate AI leadership by establishing executive-level roles dedicated to AI strategy and oversight.¹⁶

Collaboration between PAGs and pharma companies around AI is beginning to take shape, with several examples already in motion. For instance, the Peggy Lillis Foundation has explored partnership opportunities with Recursion to accelerate therapeutic discovery for C. difficile infections.⁴ IAPO contributed, together with Viatris, to the development of EczemaLess, an AI-powered app designed to help patients track and manage atopic dermatitis.¹⁷ ZERO Prostate is developing an AI-enabled prostate cancer support and navigation tool for patients, care partners, and at-risk communities using SMS texting as part of its “Blitz the Barriers” initiative.⁴ (Exhibit C – Examples of PAG–Pharma AI Collaborations)

In this early stage of collaboration, we have observed that most efforts between PAGs and pharma companies are still rooted in traditional financial sponsorship. However, a more mature phase is beginning to emerge—one where PAGs and pharma companies move beyond transactional funding relationships to co-develop AI tools, policies, and governance frameworks. Pharmaceutical companies that take this next step—working directly with patient groups to co-create strategy, set ethical guardrails, and build meaningful patient engagement tools—will position themselves as industry leaders in ethical, patient-centered innovation.

Opportunities and Challenges

Our Patient and Caregiver Advisory Council also contributed valuable perspectives on both the potential opportunities of AI and the risks and concerns for patients that must be addressed for ethical and effective adoption.

Joe Nadglowski, CEO of the Obesity Action Coalition (OAC), highlighted AI’s potential to accelerate clinical validation by leveraging real-world data, reducing both time and risk in comparison to traditional clinical trials. Another opportunity discussed was the ability for AI to empower patients by giving them greater access to information, particularly regarding side effects and drug interactions. This transparency could give patients more control over their health decisions and foster deeper engagement in their own healthcare.

Alisha Lewis, a sickle cell and rural health advocate, emphasized that AI could significantly improve diagnostic accuracy, especially in rare diseases where the conventional symptom-based communication model between patients and clinicians often falls short. Through our research, we learned that pharma companies are exploring how natural language processing (NLP) can help clinicians detect subtle verbal cues that indicate the presence of a rare condition.

Despite this optimism, advocates also raised key concerns.

Arya Singh, an expert patient advocate for spinal muscular atrophy (SMA), called out the lack of disease-specific AI governance policies. This gap appears to be common across most disease areas but is especially urgent in rare disease communities, where trustworthy, specific guidance is already limited.

Another major concern is that AI might be used by payers or industry in ways that erode trust, by diminishing the importance of human touch, or prioritizing cost savings over empathy and access. Some patients, caregivers, and clinicians are responding to this and empowering themselves with AI tools. For example, AI tools such as “Claimable” and “Doximity AI” are helping several individuals living with obesity and other chronic conditions navigate payer strategies that create access barriers, such as prior authorization protocols and cost-containment restrictions.¹⁸

Additionally, while pharmaceutical companies are eager to use AI to improve patient outcomes, our Advisory Council noted that these efforts often lack a trusted, co-designed ethical framework that reflects the values and priorities of patients and PAGs. Without such frameworks, pharma-led AI initiatives risk being perceived as out of touch with real-world patient needs. This sentiment is reinforced by a 2025 article in the Journal of Clinical Medicine, which called for regulatory bodies to include AI experts, healthcare professionals, ethicists, and patient advocacy organizations, alongside pharmaceutical companies, in shaping the ethical governance of AI in healthcare.¹⁹

This underscores the importance of cross-stakeholder collaboration. For AI initiatives to be both impactful and trusted, it is essential that pharmaceutical companies work alongside patients, advocacy groups, and other stakeholders to establish shared governance structures that elevate the patient voice and honor patient rights.

Current initiatives by independent third-party organizations are making meaningful strides towards closing the gap in patient-centered AI governance. The Joint Commission, the largest independent, evidence-based healthcare standard setting organization in the U.S., and the Coalition for Health AI (CHAI), a nonprofit organization founded by clinicians to advance responsible health AI, announced in June 2025 a new partnership to accelerate the development and adoption of AI best practices and guidance across the U.S. healthcare system, with plans for a formal AI certification for the healthcare industry in the near future.²⁰

Additionally, the National Academy of Medicine (NAM) published the “AI Code of Conduct for Health and Medicine” in May 2025,²¹ aiming to ensure that AI in healthcare is used accurately, safely, ethically, and in service of better health for all. NAM works with the Light Collective, a patient advocacy organization that has created a framework called “AI Rights for Patients,” which outlines governance rules impacting AI use in pharma.²² Industry consortia such as the Alliance for Artificial Intelligence in Healthcare (AAIH) are also advancing ethical and transparent standards for AI use.²³ However, these efforts must go further and formally include patient advocacy leadership in AI policy development and deployment.

Key Principles for Responsible AI for Patients and Pharma

1. Early Patient Engagement

Patients and patient advocates must be engaged early in AI design, development, and deployment. Reference: National Institute of Standards and Technology (NIST)²⁴

2. Transparency

Companies/organizations must clearly disclose when, how, and where AI is being used, including model sources and known data limitations. Reference: Industry-standard disclosure ethics; aligned with NIST and FDA transparency guidance²⁴

3. Equity by Design

AI solutions must be designed to reduce (not exacerbate) health disparities. Reference: National Academy of Medicine (NAM), AI Code of Conduct for Health and Medicine (2025)²¹

4. Privacy, Consent & Data Security

AI systems must adhere to strict consent, privacy, and cybersecurity protections, especially when handling sensitive health data. Reference: National Health Council (NHC) position on FDA governance and cybersecurity (2025)²⁵

5. Validation & Monitoring

AI tools must be validated and carefully monitored to prevent false or misleading outputs (“hallucinations”) in clinical or regulatory contexts. Reference: FDA Draft Guidance (Jan 2025): Considerations for the Use of AI in Regulatory Decision-Making²⁶

6. Cultural Competence & Bias Awareness

AI must be designed and evaluated for cultural competence and fairness to prevent reinforcing systemic bias. Reference: Ethical AI frameworks and academic literature (NAM, CHAI)²⁰,²¹
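As one illustration of how Principles 5 and 6 might be put into practice, the sketch below compares a model's accuracy across demographic subgroups and flags any group that trails the best-performing group by more than a set margin. The record format, the group labels, the sample data, and the 0.05 margin are all hypothetical assumptions chosen for illustration; none of them comes from the frameworks cited above, and a real audit would use metrics agreed with patient communities.

```python
from collections import defaultdict


def subgroup_accuracy(records):
    """Return accuracy per group from (group, predicted, actual) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def flag_gaps(accuracy_by_group, max_gap=0.05):
    """Return groups whose accuracy trails the best group by more than max_gap."""
    best = max(accuracy_by_group.values())
    return sorted(
        group for group, acc in accuracy_by_group.items() if best - acc > max_gap
    )


# Hypothetical validation records: (group label, model prediction, true label).
sample = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]

accuracy = subgroup_accuracy(sample)
flagged = flag_gaps(accuracy)
print(accuracy)
print(flagged)
```

Accuracy is only one possible measure. The same pattern could be applied to false-negative rates or other metrics that matter more in a given disease area.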

DKI Health’s Point of View: AI Cannot Replace the Patient’s Lived Experience

AI’s strength lies in its ability to operate at scale and speed—but it is the authenticity of real human stories that builds trust and drives policy change.

Across the advocacy ecosystem, there is a growing concern that AI may be used to replace, rather than support, the human roles that build trusted connections with patients and communities. This fear is especially pronounced among community health workers, nurse navigators, and patient advocacy groups’ staff, as well as medical science liaisons (MSLs) and field representatives working within pharmaceutical companies—all of whom see their work as relationship-based, not transactional.

Digital representations—such as AI-generated avatars or synthetic patient stories—are becoming increasingly common in healthcare communication. While these tools offer new ways to reach communities that are historically underserved, they also raise important concerns about authenticity, trust, and consent. If these representations are not transparently disclosed or co-designed with actual patient communities, they risk undermining the very trust they are intended to build. Opinions across the industry are mixed; some view these tools as efficient and scalable, while others worry they may cross ethical lines.

At DKI Health, we believe that digital tools and AI-generated content, when co-created with patients, can expand reach, personalize education, and protect privacy without compromising authenticity or ethics.

As one of our advisors put it, “AI isn’t a doctor, and it’s not always 100% accurate.” The human element must remain central. Without human intervention and creativity, AI-generated work risks becoming generic, repeating what it has seen before and failing to represent diverse perspectives. This is particularly dangerous in healthcare, where large language models (LLMs) have been shown to under-represent affected individuals and to reflect or exaggerate systemic bias. A 2025 systematic review in Nature Medicine found that 22 out of 24 studies (91.7%) identified demographic biases in LLMs used for medical tasks, with racial and gender biases especially prevalent.²⁷

AI technology must be shaped and trained to amplify empathy, not to substitute it for the sake of efficiency. Lived experience, trust, and human connection cannot be automated.

Importantly, there is a limited window of opportunity over the next few years to establish how AI will shape and support the future of patient advocacy initiatives. Regulators such as the U.S. Food and Drug Administration (FDA) are beginning to expect pharmaceutical companies to use smaller language models (SLMs) that are fit-for-purpose and designed to be transparent, patient-friendly, and risk-aware. These smaller models can be quickly fine-tuned for specific geographies, languages, patient populations, or therapeutic areas. They also require significantly less computational power than traditional large language models (LLMs), aligning with the industry’s growing commitment to energy sustainability. Notably, smaller models can often operate on-premises or within private cloud environments, thereby reducing the need to expose sensitive patient data to large third-party platforms.²⁸

This urgency is underscored by developments in the tech sector, such as Meta’s expressed ambition to build Artificial General Intelligence (AGI). Sometimes referred to as “superintelligence,” AGI describes an AI that can reason, learn, and act across a wide range of tasks at or beyond human cognitive levels, without human guidance.²⁹ Google, a founding member of the Coalition for Health AI (CHAI), has also signaled a commitment to developing AGI by the year 2030, underscoring the importance of embedding safety and ethical standards in the future of healthcare AI.³⁰ As this frontier approaches, patient advocates and pharma alike must ensure that the human element is not left behind.

Our vision is to co-create a bold new AI governance model with pharmaceutical industry partners and patient communities—one grounded in medical ethics, patient rights, health equity, and the voices of those with lived experience. DKI Health supports development of responsible AI usage guidelines and policies that are co-designed with, and trusted by, technology experts, medical professionals, and patient communities.

“Patient advocacy organizations are a critical stakeholder…they serve to support patients and caregivers, to champion their health needs, to educate them on health AI’s opportunities, challenges, and concerns, and to provide direct representation of patient groups to inform policy and improve research design, ethics, and logistical barriers, among others.”

Recommendations for Pharma–Patient Collaborations in Using AI Technologies

To ensure that AI technologies are implemented ethically, effectively, and equitably in the pharmaceutical space, we offer the following recommendations for co-developing AI guidelines, governance frameworks, and patient-centered AI adoption across functions, in partnership with patients, physicians, and advocacy organizations:

1. Design AI Governance Frameworks and Create Policies/Guidelines That Reflect Best Practices

Independent third-party organizations are shaping responsible, patient-centered AI frameworks across the healthcare landscape. Pharma should work closely with groups such as the National Academy of Medicine, the Light Collective, CHAI, and the National Health Council to:

Incorporate best practices into AI development and deployment;

Uphold robust data governance practices, including encryption and access controls, to mitigate privacy risks;

Implement hybrid oversight models that combine automated systems with human review;

Ensure AI models are regularly updated to remain relevant and safe;

Apply change management best practices to build internal confidence and support successful adoption;

Invest in internal capacity-building by training and upskilling current employees or hiring specialized AI talent to ensure a patient-centered approach to the use of these technologies.
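The hybrid oversight model in the list above can be pictured as a simple routing rule: an automated check decides which AI outputs may be released directly and which must go to a human reviewer first. The sketch below is a minimal illustration under stated assumptions. The `AIOutput` fields, the `route` function, the list of sensitive topics, and the 0.9 confidence threshold are hypothetical and would need to be defined with medical, regulatory, and patient input.

```python
from dataclasses import dataclass

# Hypothetical topics that always require human review, whatever the confidence.
SENSITIVE_TOPICS = {"dosing", "side_effects", "drug_interactions"}


@dataclass
class AIOutput:
    text: str
    confidence: float  # assumed model-reported score between 0 and 1
    topic: str


def route(output: AIOutput, min_confidence: float = 0.9) -> str:
    """Return 'human_review' or 'auto_release' for a single AI output."""
    if output.topic in SENSITIVE_TOPICS:
        return "human_review"
    if output.confidence < min_confidence:
        return "human_review"
    return "auto_release"


# Hypothetical drafts produced by a patient-support assistant.
drafts = [
    AIOutput("The clinic is open from 9 to 5.", 0.97, "logistics"),
    AIOutput("This medicine may interact with others.", 0.99, "drug_interactions"),
    AIOutput("Your nearest trial site is downtown.", 0.62, "logistics"),
]

decisions = [route(draft) for draft in drafts]
print(decisions)
```

Routing sensitive topics to a person regardless of model confidence reflects the view expressed throughout this paper that human judgment should stay central where patient safety is involved.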

2. Unlock Shared AI Opportunities—Identify and Scale AI Through Strategic Partnerships With Patient Advocacy Organizations

Pharma and PAGs are using AI for similar goals—clinical research, early detection, and patient engagement—and often rely on the same tools (e.g., CytoReason, Kopa).⁴ Yet, collaboration remains limited. While several pharmaceutical companies are already working with PAGs on AI-related initiatives—from clinical trial design to patient navigation tools—there is a growing opportunity to evolve these relationships. Rather than defining AI initiatives independently and then inviting PAGs to participate, companies should expand these collaborations upstream, co-designing AI solutions with PAGs from the outset to ensure relevance, trust, and real-world impact.

Strategic partnerships with PAGs—in addition to those with medical and technology experts—can unlock deeper insights, foster trust, and ensure AI solutions are designed with (not just for) patients. These alliances also support more equitable innovation, helping pharma scale AI tools that reflect lived experience, address real barriers to care, and deliver measurable improvements in patient outcomes.

For pharma, PAGs can be strong innovative partners, and co-creating AI strategies with them ensures that technology aligns with patient priorities.

3. Integrate Patient-Centered AI Across Functions

Pharmaceutical companies are rapidly integrating AI across the enterprise, including in R&D (drug discovery, clinical trial optimization), commercial (forecasting, segmentation), manufacturing (predictive models for logistics and supply chain, quality control), and market access (pricing, reimbursement analytics). However, in most cases, companies develop and deploy AI tools without input from patients. The exception tends to be patient engagement and patient advocacy functions, where the role of patient voice is more apparent. As AI becomes more embedded in decision-making, pharma companies must broaden their commitment to patient-centricity by including patients across all functions. To put this into practice, companies should:

R&D: Involve PAGs early in the development of AI models that use real-world data (RWD), natural language processing (NLP) of patient-reported outcomes, or predictive algorithms that inform clinical trial design. This helps ensure inclusion/exclusion criteria reflect patient diversity and lived experiences. PAGs can also help identify meaningful endpoints, contextualize disease progression, and ensure AI-driven insights align with real-world patient priorities.

Commercial & Marketing: Collaborate with PAGs to review AI-generated content and patient segmentation strategies. They can provide patient stories, test messaging tools, and advise on affordability models to ensure that commercial AI systems avoid bias and reflect the complexity of real-world patient journeys. Involving PAGs in campaign development also helps ensure that language, imagery, and targeting strategies are culturally sensitive, stigma-aware, and grounded in the realities of patients' lived experiences.

Market Access: Partner with PAGs to integrate real-world patient experiences into AI models used for pricing, reimbursement, and formulary decision-making. PAGs can help validate AI-driven affordability simulations or co-develop patient-centered value frameworks that go beyond traditional cost-effectiveness metrics. PAGs can also support the identification of underserved populations, helping AI tools flag equity gaps in access models and suggest targeted policy or support interventions.

Manufacturing & Supply Chain: Collaborate with PAGs to understand real-world barriers to medication access, such as delays, shortages, or distribution inequities. These insights can inform AI models used for demand forecasting, inventory management, and geographic allocation to ensure that efficiency improvements don’t come at the expense of access or equity. PAGs can also help pharma prioritize supply chain decisions that account for the needs of rural communities, chronic disease patients, or those reliant on temperature-sensitive therapies.

Cross-Functional, Multi-Disciplinary Governance: Establish a formal corporate advisory group that includes representative patients from diverse communities alongside technology and medical experts. This group can provide feedback across all departments using AI—from drug development to post-market surveillance—to ensure that human values are embedded in algorithms and decision-making processes.

AI Systems and Model Design: Build explainability (XAI), transparency, and fairness into all AI tools, even those not regulated by GxP or HIPAA. PAGs can help validate these systems, ensuring trust is maintained when AI operates behind the scenes. Involving PAGs in model testing and review helps surface blind spots, assess real-world relevance, and confirm that algorithmic decisions align with patient values—especially when those decisions affect communication, risk prediction, or access pathways.

Conclusion: A Call to Preserve the Heart of Advocacy in the Age of AI

AI is undeniably powerful—but it cannot replace the power of lived experiences, authentic relationships, or the trust built through human connection.

PAGs are cautiously leaning into AI. Most are beginning with low-risk applications; others are developing usage policies, educating their teams, and participating in industry collaborations—signaling a shift from experimentation to strategy.

This moment presents a unique opportunity for pharmaceutical companies not only to support new AI-based tools but also to help PAGs build capacity to use existing AI technology more effectively. This includes prioritizing co-design with patients and committing to principles of data integrity, transparency, and informed consent.

True innovation in healthcare happens when technology serves humanity. AI must (and can) do more than automate workflows. While accelerating drug discovery (for example) is an important application of AI technology, AI should support patients throughout their entire healthcare journey, improving access and outcomes.

AI is becoming increasingly complex and more widely adopted across sectors. PAGs are actively evolving in their approaches, embracing digital tools and data-informed strategies, which positions them as essential partners in ensuring that AI solutions are truly patient-centric. Because AI models continue to learn and change as they are retrained and used, patient advocates must actively participate in shaping AI systems, ensuring that these tools are designed to work for patients.

The urgency to act is clear. As AI capabilities evolve rapidly—with new technologies like smaller language models (SLMs) gaining regulatory focus and ambitious advances toward “superintelligence” (AGI) on the horizon—the healthcare ecosystem is shifting in ways that demand thoughtful, patient-centered leadership. Pharmaceutical companies and patient advocates have a unique opportunity and responsibility to co-create AI systems grounded in empathy, equity, and partnership. The time is now to write this next chapter together, with real voices leading the way.

Citations

Business Reporter. Big pharma bets on AI to speed up clinical trials. 2025. https://www.business-reporter.com/ai--automation/big-pharma-bets-on-ai-to-speed-up-clinical-trials

Crohn's & Colitis Foundation. CytoReason and the Crohn’s & Colitis Foundation Forge Groundbreaking Data Collaboration to Advance IBD. Published June 11, 2024. https://www.crohnscolitisfoundation.org/cytoreason-and-the-crohns-colitis-foundation-forge-groundbreaking-data-collaboration-to-advance-ibd.

Mindera Health. Mind.Px: Personalized Treatment for Psoriasis. https://minderahealth.com.

DKI Health Analysis. Surveys and interviews conducted with the DKI Health Patient & Caregiver Advisory Council, May–June 2025. Presented at: Chief Patient Officer Summit; July 2025; Boston, MA.

National Bleeding Disorders Foundation. NBDF and ATHN Collaboration. Published November 29, 2023. https://www.bleeding.org/news/nbdf-and-athn-collaboration

EY. AI-powered healthcare: How medtech and pharma companies can capture the AI opportunity. 2025. https://www.ey.com/content/dam/ey-unified-site/ey-com/en-in/pdf/ey-medtech-industry.pdf

BenevolentAI. BenevolentAI achieves further milestones in AI-enabled target identification collaboration with AstraZeneca. Press release. 2024. https://www.benevolent.com/news-and-media/press-releases-and-in-media/benevolentai-achieves-further-milestones-ai-enabled-target-identification-collaboration-astrazeneca/

Drug Discovery Trends. AI drug development: Janssen’s use of Trials360.ai and beyond. 2024. https://www.drugdiscoverytrends.com/ai-drug-development-janssen-target-discovery-and-beyond/

Supply Chain Brain. AI in pharma supply chain: Aera Technology and Merck KGaA case study. 2024. https://www.supplychainbrain.com/ext/resources/directories/files/37cc3941-96c5-47e8-9477-1904282a4d4c.pdf?1552600590

Viz.ai. Announces Agreement with Bristol Myers Squibb on Hypertrophic Cardiomyopathy (HCM); March 3, 2023. https://www.businesswire.com/news/home/20230303005086/en/Viz.ai-Announces-Agreement-with-Bristol-Myers-Squibb-to-Enable-Earlier-Detection-and-Management-of-Suspected-Hypertrophic-Cardiomyopathy-HCM

Fierce Pharma. Biogen teams with Happify Health on AI-powered MS patient engagement platform. July 2022. https://www.fiercepharma.com/marketing/biogen-teams-happify-health-ai-powered-ms-patient-engagement-platform

Tempus. Using data and AI/ML to achieve precision medicine. 2024. https://www.tempus.com/resources/content/articles/using-data-and-ai-ml-to-achieve-precision-medicine/

Veeva Systems. Modular content powers omnichannel engagement at speed and scale. 2023. https://ir.veeva.com/investors/news-and-events/latest-news/press-release-details/2023/Modular-Content-Powers-Omnichannel-Engagement-at-Speed-and-Scale/default.aspx

PR Newswire. ODAIA delivers AI agent in Veeva CRM for pharma sales reps to drive better HCP engagement. 2025. https://www.prnewswire.com/news-releases/odaia-delivers-ai-agent-in-veeva-crm-for-pharma-sales-reps-to-drive-better-hcp-engagement-302251234.html

Veeva Systems. AI comes to Vault CRM and other industry advances. 2025. https://www.veeva.com/eu/blog/veeva-commercial-summit-europe-ai-comes-to-vault-crm-and-other-industry-advances/

PixieBrix. Top AI Officers of 2025. 2025. https://www.pixiebrix.com/reports/top-ai-officers-of-2025

International Alliance of Patients’ Organizations (IAPO). EczemaLess: Helping Patients Manage Atopic Dermatitis with AI. IAPO; 2022. https://www.iapo.org.uk/news/2022/aug/23/eczemaless-helping-patients-manage-atopic-dermatitis-ai

Robbins R. Startups are using artificial intelligence to help patients fight insurance denials. STAT. Published December 12, 2024. https://www.statnews.com/2024/12/12/startups-using-artificial-intelligence-fight-insurance-denials-health-tech

Goktas P, Grzybowski A. Shaping the future of healthcare: Ethical clinical challenges and pathways to trustworthy AI. J Clin Med. 2025. https://pubmed.ncbi.nlm.nih.gov/40095575/

The Joint Commission and Coalition for Health AI. The Joint Commission and Coalition for Health AI join forces. Press release. June 2025. https://www.jointcommission.org/resources/news-and-multimedia/news/2025/06/the-joint-commission-and-coalition-for-health-ai-join-forces

National Academies of Sciences, Engineering, and Medicine. An artificial intelligence code of conduct for health and medicine: Essential guidance for aligned action. 2025. https://nam.edu/our-work/programs/leadership-consortium/health-care-artificial-intelligence-code-of-conduct/

Light Collective. Collective Digital Rights for Patients v1.0. March 2024. https://lightcollective.org/wp-content/uploads/2024/03/Collective-Digital-Rights-For-Patients_v1.0.pdf

Alliance for Artificial Intelligence in Healthcare (AAIH). About AAIH. https://www.theaaih.org/about

National Institute of Standards and Technology (NIST). Artificial Intelligence Risk Management Framework (AI RMF 1.0). NIST AI 100-1. 2023. https://doi.org/10.6028/NIST.AI.100-1

National Health Council. NHC submits comments on FDA draft guidance on AI to support regulatory decision-making for drugs and biologics. 2025. https://nationalhealthcouncil.org/letters-comments/nhc-submits-comments-on-fda-draft-guidance-on-ai-to-support-regulatory-decision-making-for-drugs-biologics

U.S. Food and Drug Administration (FDA). Artificial intelligence in drug development. Updated January 2025. https://www.fda.gov/about-fda/center-drug-evaluation-and-research-cder/artificial-intelligence-drug-development

Nature Medicine. Systematic review of bias in large language models for healthcare. 2025. https://pmc.ncbi.nlm.nih.gov/articles/PMC12137607/

U.S. Food and Drug Administration (FDA). Considerations for the use of artificial intelligence to support regulatory decision-making for drug and biological products [Draft guidance]. January 6, 2025. https://www.fda.gov/media/184830/download

Singh J. Meta deepens AI push with ‘Superintelligence’ lab, source says. Reuters. June 30, 2025. https://www.reuters.com/business/zuckerbergs-meta-superintelligence-labs-poaches-top-ai-talent-silicon-valley-2025-07-08/

Hartmans A. Google predicts AGI by 2030 and emphasizes alignment with ethical AI standards. Axios. Published May 21, 2025. https://www.axios.com/2025/05/21/google-sergey-brin-demis-hassabis-agi-2030

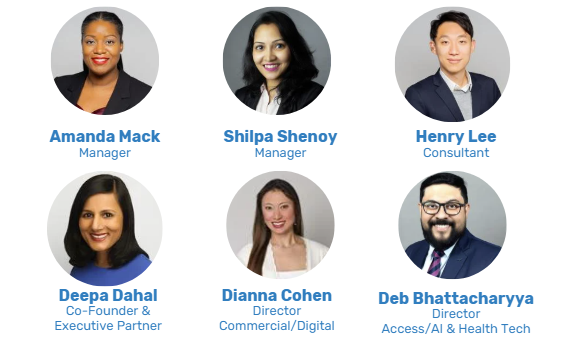

Contributors

Acknowledgements

DKI Health would like to thank our Patient & Caregiver Advisory Council Members who shared their insights and provided input into the recommendations:

Courtney Bugler, CEO and President, Zero Prostate Cancer

Phil Gattone, CEO, National Bleeding Disorders Foundation

Jen Grand-Lejano, Managing Director of Advocacy, American Cancer Society Cancer Action Network

Christian John Lillis, Co-Founder and CEO, Peggy Lillis Foundation

Mary Kemp, Director of Grassroots Organizing, American Cancer Society Cancer Action Network

Sue Koob, CEO, Preventive Cardiovascular Nurses Association

Neda Milevska Kostova, Chair of AMR Patient Alliance and Immediate Past Chair, International Alliance of Patients’ Organizations (IAPO)

Alisha Lewis, Sickle Cell Disease and Rural Health Advocate

Kenneth Lowenberg, Co-Founder, The Talk About It Company

Joe Nadglowski, President & CEO, Obesity Action Coalition

Arya Singh, SMA Expert Patient and Advocate

Denise Smith, Inaugural Executive Director, National Association of Community Health Workers

Disclaimer

This white paper was developed by DKI Health, a strategic consultancy specializing in patient advocacy and responsible healthcare innovation. The insights and analysis presented are based on research, expert interviews, and data available at the time of publication. However, artificial intelligence (AI) technologies and their applications in healthcare are evolving rapidly. New developments may emerge that shift the landscape described in this report.

We are committed to closely monitoring changes in the field and will continue to update our guidance as the regulatory environment, ethical frameworks, and use cases evolve.

For inquiries, or to discuss how these developments may affect your organization, please contact us at www.dkihealth.com.